How to Know If the Video You’re Watching Was Made With AI

This post is part of Lifehacker’s “Exposing AI” series. We’re exploring six different types of AI-generated media, and highlighting the common quirks, byproducts, and hallmarks that help you tell the difference between artificial and human-created content.

AI companies are excited about video generators: They tout the creative possibilities these new models offer, and relish how impressive the end results can be. From my view, however, a technology that allows anyone to create realistic videos with a simple prompt isn't fun or promising, but terrifying. Do you really want to live in a world in which any video you see online could have been created out of thin air with AI? Like it or not, it's where we're headed.

When you give bad actors tools that can manipulate videos so well that many, if not most, people will believe they're real in passing, you're throwing gasoline on a fire that's been burning since the first person lied on the internet. It's now more important than ever to be vigilant about what we see online, and to cast a critical eye on any videos that purport to represent reality, especially when that reality is meant to provoke us or influence our outlook on the world.

AI videos aren't all the same

There are really two kinds of AI videos to watch out for right now. The first kind is video fully generated by AI models: entire sequences that use no real footage and never existed before being produced. Think OpenAI's Sora model, which is capable of rendering short yet high-quality videos that could easily trick people into thinking they're real. Luckily for us, Sora is still in development, and isn't yet available to the public, but there are other tools those in the know can use to generate these videos from scratch.

What's more relevant at this point in time, and more concerning for short-term implications, are videos altered by AI. Think deepfakes: real videos that use AI to overlay one person's face on top of another, or to alter a real face to match manipulated audio content.

We'll cover ways to spot both types of AI video content: As AI video generators get better and become more accessible, you may start to see these videos appear online the same way AI images have blown up. Stay vigilant.

How AI video generators work

Like other generative AI models, AI video generators are fed a huge amount of data in order to work. Where AI image models are trained on individual images and learn to recognize patterns and relationships within static pictures, AI video generators are trained to look for the relationship between multiple images, and how those images change in sequence. A video, after all, is simply a series of individual images, played back at a speed that creates the illusion of movement. If you want a program to generate videos out of nowhere, you not only need it to be able to generate the subjects in those videos, but to know how those subjects should change frame to frame.
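To make the "series of images" idea concrete, here is a minimal sketch in Python. It treats a clip as a list of tiny grayscale frames and measures how much the picture changes from one frame to the next. The function names and the toy clip are made up for illustration; real video models work on far larger frames and learn these relationships rather than computing them directly.

```python
def frame_change(prev_frame, next_frame):
    """Mean absolute pixel difference between two equally sized grayscale frames."""
    total = 0
    count = 0
    for prev_row, next_row in zip(prev_frame, next_frame):
        for a, b in zip(prev_row, next_row):
            total += abs(a - b)
            count += 1
    return total / count


def changes_over_time(frames):
    """Compare each frame with the one that follows it."""
    return [frame_change(a, b) for a, b in zip(frames, frames[1:])]


# Toy three-frame clip (hypothetical data): one bright pixel slides
# one column to the right in every frame.
clip = [
    [[255, 0, 0], [0, 0, 0]],
    [[0, 255, 0], [0, 0, 0]],
    [[0, 0, 255], [0, 0, 0]],
]

print(changes_over_time(clip))  # prints [85.0, 85.0]
```

The steady value reflects smooth, consistent motion. A generator has to produce frames whose changes are just as consistent, which is part of why flicker and vanishing objects are such common tells.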

Deepfake programs are specifically trained on faces, and are designed to mimic the movements and emotions of the video they're overlaying. They often use a generative adversarial network (GAN), which sets two AI models against each other: one that generates AI content, and another that tries to identify whether that content is AI-generated. On the other hand, a model like Sora is, in theory, able to generate video of just about anything you ask it to. Sora is what's known as a diffusion model, which adds "noise" (essentially static) to training data until the original image is gone. From here, the model will try to create a new version of that data from the noise, which trains it to create new content from scratch.
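The noising half of that process can be sketched in a few lines of Python. This is a rough illustration only: the schedule values are common textbook defaults assumed here, not anything OpenAI has confirmed about Sora, and the hard part (the neural network that learns to undo the noise) is left out entirely. The helper names are hypothetical.

```python
import math
import random


def alpha_bar_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Fraction of the original signal that survives after each noising step.

    The linear schedule and its endpoints are assumed defaults for illustration.
    """
    alpha_bars = []
    running = 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / max(num_steps - 1, 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def add_noise(pixels, alpha_bar, rng):
    """Blend a flat list of pixel values with Gaussian noise (the "static")."""
    keep = math.sqrt(alpha_bar)
    noise_scale = math.sqrt(1.0 - alpha_bar)
    noise = [rng.gauss(0.0, 1.0) for _ in pixels]
    noisy = [keep * p + noise_scale * n for p, n in zip(pixels, noise)]
    return noisy, noise


rng = random.Random(0)
image = [1.0, -1.0, 0.5, -0.5]  # a made-up four-pixel "image"
alpha_bars = alpha_bar_schedule(1000)

slightly_noisy, _ = add_noise(image, alpha_bars[0], rng)   # still close to the image
mostly_noise, _ = add_noise(image, alpha_bars[-1], rng)    # almost pure static
```

During training, a model would be shown the noisy version and asked to predict the noise that was added. Learning to do that at every noise level is what lets it later start from pure static and work backward to a new image or video.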

It's still early days for total AI video generation, and while deepfake tech is good, it's not great. There are limitations here that might not be present in future iterations of these technologies, but as of today, there are clues you can look for to tell whether that video you're watching is actually real, or something manipulated.

The faces don't look quite right

The technology to overlay one person's face on top of another is impressive, but it's far from perfect. In many (if not most) cases, a deepfake will have obvious signs of forgery. Often, it looks like a mediocre Photoshop job: The face doesn't blend into the rest of the person's head, the lighting doesn't match the scene it's set in, and the whole thing has an uncanny valley effect to it.

If you're watching a video of a notable person saying or doing something controversial, really look at their face: Is it possible AI has played a part here? This video of "President Obama" saying ludicrous things was made six years ago, but it demonstrates some of the notable visual flaws this type of AI-altered video is known for:

The mouths don't match the speech

Likewise, another flaw with current deepfake technology is how it struggles to match the fake face's mouth movements to the underlying speech—especially if the speech is artificial as well.

Take a look at this deepfake of Anderson Cooper from last year: The fake face is more realistic than the video of Obama above, but the lip movements do not match the speech they've given AI Anderson:

Many of the deepfakes circulating on social media are poorly made, and are obvious AI slop if you know what you're looking for. Many people don't, so they see a video of a politician saying something they don't like and assume it is true, or are amused enough not to care.

Look for glitches and artifacts

Like AI image generators, AI video generators produce videos with odd glitches and artifacts. You might notice the leaves in a tree flickering as the camera moves towards them, or people walking in the background at a different frame rate than the rest of the video. While the video below appears realistic at first glance, it's full of these glitches, especially in the trees. (Also, notice how the cars on the road to the left constantly disappear.)

But the worst of the bunch? Deepfakes. These videos often look horrendous, as if they've been downloaded and reuploaded 1,000 times, losing all fidelity in the process. This is on purpose, in an attempt to mask the flaws present in the video. Most deepfake videos would give themselves away in an instant if they were presented in 4K, since the high-resolution video would highlight all of their aforementioned flaws. But when you reduce the quality, it becomes easier to hide these imperfections, and, thus, easier to trick people into believing the video is real.

The physics are off

A video camera will capture the world as it is, at least as well as the camera's lens and sensor are able to. An AI video generator, on the other hand, creates videos based on what it's seen before, but without any additional context. It doesn't actually know anything, so it fills in the blanks as best it can. This can lead to some wonky physics in AI-generated video.

Sora, for example, generated a video of a church on a cliff along the Amalfi Coast. At first glance, it looks pretty convincing. However, if you focus on the ocean, you'll see the waves are actually moving away from shore, in the opposite direction they should be moving.

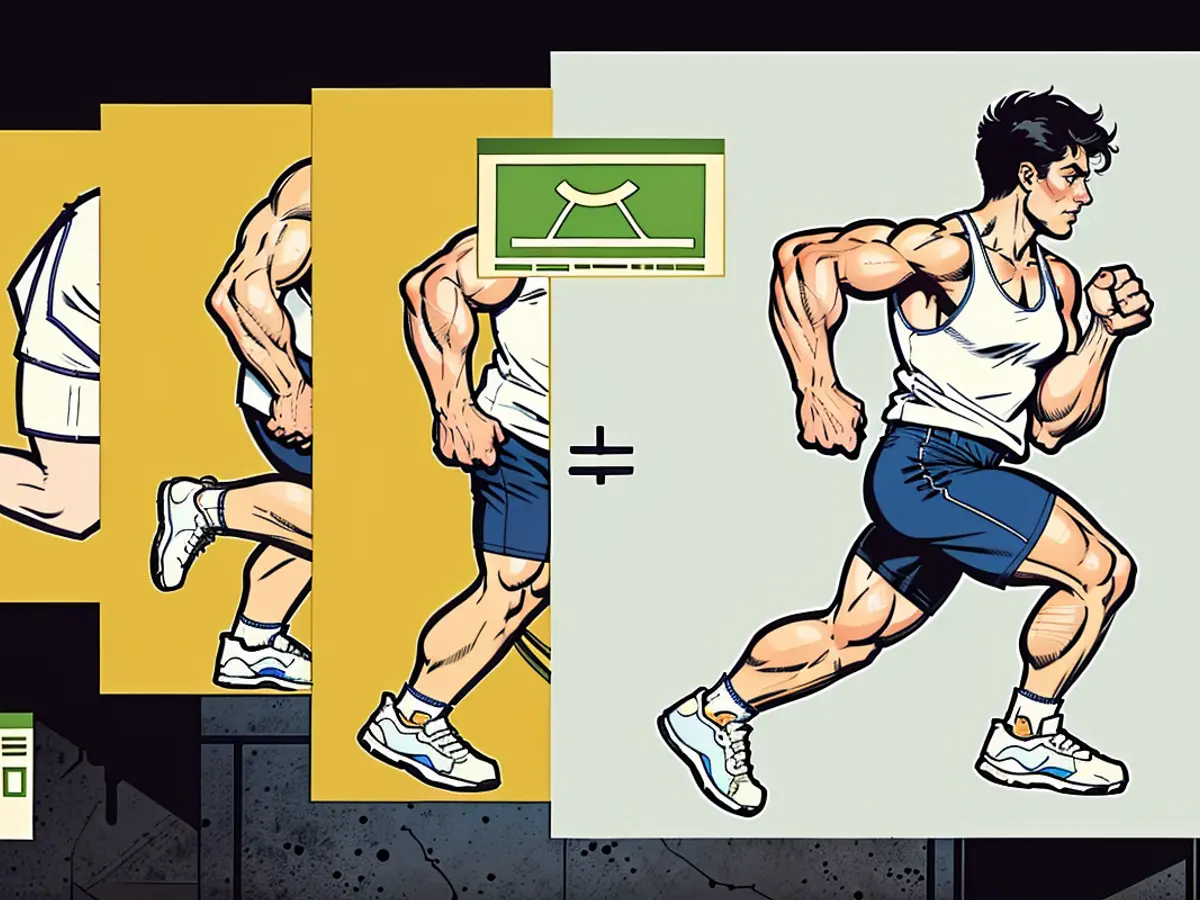

The generator also produced a surface-level convincing video of a man running on a treadmill. The big tell here is that the man is running "forward" while facing away from the treadmill, as the model doesn't understand exactly how treadmills are supposed to work. But looking closely, you can see the man's stride isn't normal: It's as if the top half of his body stops every now and then, while the bottom half keeps going. In the real world, this wouldn't really be possible, but Sora doesn't actually understand how running physics should work.

In another video, "archaeologists" discover a plastic chair in the sands of the desert, pulling it out and dusting it off. While this is a complicated request for the model, and it does render some realistic moments, the physics involved with the entire endeavor are way off: The chair appears out of thin air, the person holding it carries it in a way no person ever would, and the chair ends up floating away on its own, eventually distorting into something else entirely by the end of the clip.

There are too many limbs

The AI models producing this video content don't understand how many limbs you're supposed to have. They make the connection that limbs move between frames, but don't quite grasp that they're supposed to be the same limbs throughout the scene.

That's why you'll see arms, legs, and paws appearing and disappearing throughout a video. While it doesn't happen all the time, you can see it in this Sora video: As the "camera" tracks the woman walking forward, there's a third hand that bobs in front of her, visible between her left arm and her left side. It's subtle, but it's the kind of thing AI video generators will do.

In this example, look very closely at the cat: Towards the end of the clip, it suddenly generates a third paw, as the model doesn't understand that sort of thing generally doesn't happen in the real world. On the flip side, as the woman rolls over in bed, her "arm" seems to turn into the sheets.

Things just don't make sense

Extra limbs don't make a whole lot of sense, but it's often more than that in an AI video. Again, these models don't actually know anything: They're simply trying to replicate the prompt based on the dataset they were trained on. They know a town on the Amalfi Coast should have plenty of stone staircases, for example, but they don't seem to understand those staircases have to lead somewhere. In OpenAI's demo video, many of these stairs are placed haphazardly, with no real destination.

In this same video, watch the "people" in the crowd. At first, it might look like a bunch of tourists strolling through town, but some of those tourists disappear into thin air. Some look like they're walking downstairs, only they're not using the staircases to nowhere: They're simply "walking downstairs" on the level ground.

Look, it's been important to take the things you see on the internet with a grain of salt for a while now. You don't need AI to write misleading blog posts that distort the truth, or to manipulate a video clip to frame the story the way you want. But AI video is different: Not only is the video itself manipulated, the whole thing might never have happened. It's a shame that we have to approach the internet (and the wider world) so cynically, but when a single prompt can produce an entire video from nothing, what other choice do we have?

In the realm of tech advancements, the ability to create realistic AI videos using simple prompts has sparked both excitement and concern. It's a double-edged sword, as tools like the Sora model can generate high-quality videos that could potentially deceive viewers.

With the rise of AI video generators, it's crucial to stay vigilant and critically evaluate any video content claiming to represent reality, especially if it aims to provoke or influence our perspectives. This is especially true as AI becomes more accessible and we start seeing these video manipulations appear on the internet in larger numbers.